This past week marked the 2 year anniversary of Reclaim Hosting and what started as something of an experiment has turned into a successful business and one of the most rewarding things I've been a part of in my professional career. We've come a long way in 2 years but we're learning everyday and one thing on my ever-growing bucket list of bugs and improvements has been our website. Not the design mind you (though I do have lots of plans to give that a makeover), rather the architecture and hosting of it.

When we started the company we had just one lonely server for people's sites and that included our own. Today I manage many servers but in part out of laziness I never did move our site off the same shared hosting server our customers were on. I could justify this for awhile because it's not like we get a ton of traffic (though we're not small), but also I felt at the time it was a good gauge for our service if we were on the same system everyone else was using. Alas, I started to see holes in that methodology when people who had various issues with accessing their account be it firewall issues or otherwise would also no longer be able to get to our site. More and more it seemed like reclaimhosting.com should always be up and always be available regardless of the status of any given server we manage.

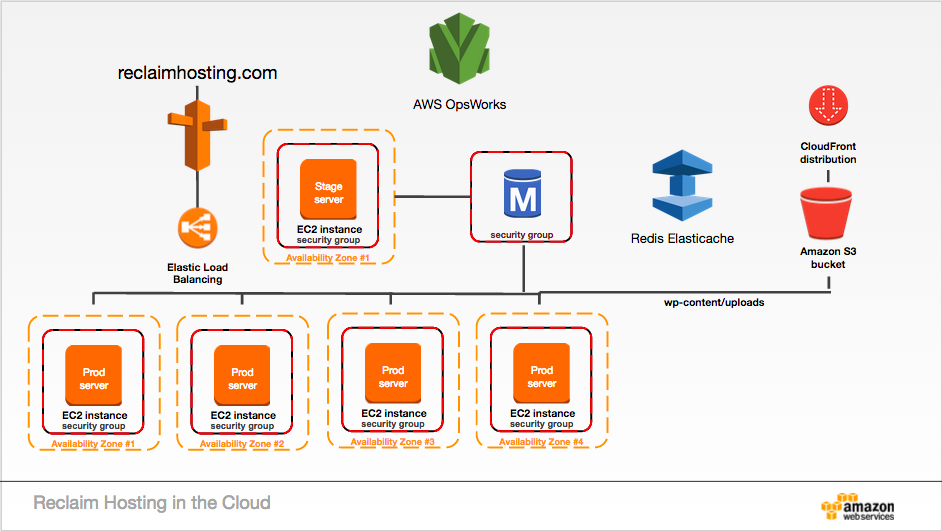

Meanwhile for the past year I've dug more and more into Amazon Web Services for server architecture. I helped UMW move UMW Blogs to AWS last summer and in fact the DNS for reclaimhosting.com has been running through Route53 for at least a year now. With a few recent projects being candidates for a multi-layered cloud approach with AWS I thought it was high time we moved the main website for Reclaim Hosting up there as a proof-of-concept and best-practice example of running WordPress in the cloud.

I started with the video below (the audio for it is incredibly quiet but it's worth it) which helped me figure out a few neat tricks for caching, staging environments, and other things I hadn't yet experimented with. The author also shares a lot of information in the notes that point to his GitHub repo for the project.

Today I flipped the DNS and at this point I believe the site is now resolving for most folks on the new setup. Here's a quick laundry list of some of the items at play with our new setup for reclaimhosting.com:

- A single EC2 server serves as a staging environment that utilizes the same database as production servers.

- The staging environment changes are committed to a private repo on GitHub (mostly just plugin and theme modifications since the database stores most all content)

- Our database is using a Multi-AZ (Availability Zone) RDS instance for high availability.

- We have an Elastic Load Balancer that is receiving the requests to the site and sending them to production servers.

- OpsWorks is setup to deploy changes from the git repo to 4 production EC2 servers.

- Each production server is located in a separate datacenter for high availability.

- All uploads are sent to S3 storage and served on the website using the CloudFront CDN for faster response times globally.

- A Redis object cache stores objects across all production servers in memory on an ElastiCache instance.

The new setup also has me digging a bit into Chef which is used in the deployment process by OpsWorks but not something I previously had any experience with. The upgraded setup also comes with a lot of security enhancements. Logins are only available via white-listed IP to our staging environment and wp-login.php is completely removed during deployment so it's virtually impossible for someone to get in to our site. Security groups (essentially firewalls) are configured in a way that each layer only has the necessary ports open to the necessary servers accessing them. For example the database can only be accessed by the running EC2 servers, the production servers will only access ports 80 and 443 (http/https) from the load balancer, and staging is only accessible via SSH with a private key.

I started out with T2-Micro instances across everything (the cheapest Amazon offers), bringing the total cost of a setup like this to around $60/month running 24/7 with 5 servers, a production database, an in-memory cache instance, and ~1GB of data stored in S3 with CDN caching. At the moment performance seems to be pretty great considering the small size of the production servers.

This is no doubt an incredibly complex environment and not something I would recommend anyone tackle for their personal sites, but for large production instances of WordPress used by your campus or sites that absolutely need to be online at all times and highly-available globally? Absolutely! And heck, if you're unsure how you might accomplish the same hire Reclaim Hosting to help you get setup or host it all for you in the cloud!