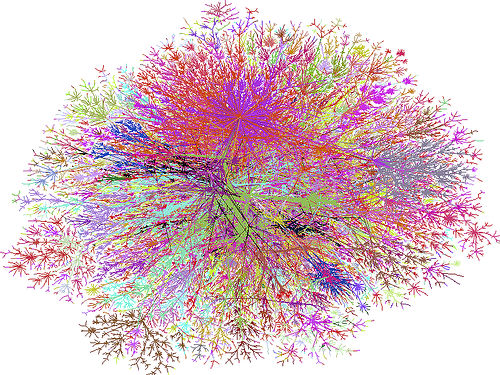

I'm straying a bit from business as usual here on the blog because I got to thinking about the transient web again. During my midday flight through Google Reader I came across some news that the BBC would be removing any websites sitting in the home folder of its server. Although the practice of creating whole sites on the root of the same web address was misguided, this body of work now represents 172 websites. In a followup post, Jeremy Keith talks a bit more about "linkrot": the idea that when you close a site, not only is it a shame that the site is gone, but a vast number of links from all over the web go dark. They don't disappear like the sites they represent; they live on, pointing to a dead space. Putting on my Hyperbole Hat, an argument could be made that when you create work online and then later remove it, you are breaking the internet in small but meaningful ways.

Source: http://www.flickr.com/photos/44124348109@N01/916142

Now, I've written before that this is all a part of the way the current web is built, and perhaps our human desire to preserve our past is clouding our ability to see this new environment we're in. Indeed, it already seems we have groups of people willing to archive and serve up the websites that the BBC is planning on closing. The Internet Archive continues to live on and show us small glimpses of the web as it was. But the real problem remains: when content is removed and that link is dead, that is the end of the road for most people. Who, upon finding a site is down or closed, begins searching the web for a possible mirror served up by a few throatbeards in San Francisco with spare server space? Certainly not the vast majority, and not your mom or dad or neighbor for sure.

But, assuming this is a problem we need to fix (and I actually do believe it is a problem worthy of a solution despite my rants), what is the solution? I see it in two parts:


### The Internet Archive

We have a good head start on the first part of the problem: scraping websites and storing their contents as a piece of history. The Internet Archive is by no means perfect, but it's already functional. Improving on this would be a simple issue of scale. Storage is cheap and we have the technology, so the project would just have to continue to grow and build. Right now most of the archiving happens on a semi-regular basis; however, some websites may not get spidered more than once in a 2-3 month period. The internet is moving too fast for that.

The goal would be to have an archive that could take daily snapshots of 90% of the web, mirror the databases, and continue to keep core services of these sites running offline. That means when Engadget goes down I can go back to the January 22nd, 2009 version and not only read articles but see the comments that were left and "like" an article through Facebook. It's a big and lofty goal, but those are the best kind. But as I said, this is only one piece of the puzzle.

### Global URLs

For anyone who has carved out their own space on the web, this process is very familiar. Register a domain, add some hosting, install blogging software of choice (or go the hosted route with a service like Tumblr, Squarespace, etc.), and start putting your content out there. Every item you publish is created with a link to yourdomain.com/item. If you're on Twitter you're probably dropping shorturls left and right as well. That's a whole 'nother beast that I'm not going to pretend to tackle, but one that could possibly be worked into this solution.

So the problem here is that when you decide you no longer want to pay for hosting, the domain, or any other portion of the process, your site goes dark. If we have our Super Magical Internet Archive up and running, the content itself is not a problem, because we have it. But everyone who bookmarked it or pointed to it from their websites now has broken links.

The solution I'm imagining is some sort of central database that serves up and routes links to and from browsers. I'd imagine this would require a fundamental change to how the internet as we know it works. Right now going to archive.timmmmyboy.com means hitting my servers and seeing what's available. In this situation all links would hit a central server first, which would do a bit of processing and show you either a live version of the site or, if it hit a 404, a redirect, or a denied request, the most recent archive.

Perhaps extra code on the end of a global URL could tell this central database exactly what you wanted. So "Show me the most current site, but only if it's the same site that was being served 2 years ago" would be a small string of code on the end of the URL, ensuring that when you go to a website you're seeing the same one that was being run 2 years ago and not the work of someone who bought the domain and made a new site. Again, this seems like a lot of work, a fundamental change to what happens when we click a link. But I believe it would be a change for the better.
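To make the idea a little more concrete, here is a rough Python sketch of what that central lookup might do. Everything in it is made up for illustration: the `Archive` class, the `resolve` function, and the `owner` field (a stand-in for whatever would really tell us "this is still the same site", maybe a content fingerprint or registration record) are my assumptions, not an existing service or API. The live fetch is passed in as a plain function so the sketch runs without touching the network.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional


@dataclass(frozen=True)
class Snapshot:
    taken: date   # day the archive captured the page
    owner: str    # hypothetical marker for "which site this was"
    body: str


@dataclass(frozen=True)
class LiveResponse:
    status: int   # HTTP-style status code from the live site
    owner: str    # same hypothetical marker, for the live site
    body: str


class Archive:
    """In-memory stand-in for the archive: snapshots per URL, oldest first."""

    def __init__(self) -> None:
        self._snapshots: dict[str, list[Snapshot]] = {}

    def add(self, url: str, snapshot: Snapshot) -> None:
        snaps = self._snapshots.setdefault(url, [])
        snaps.append(snapshot)
        snaps.sort(key=lambda s: s.taken)

    def latest(self, url: str, on_or_before: Optional[date] = None) -> Optional[Snapshot]:
        """Most recent snapshot, optionally limited to a cutoff date."""
        snaps = self._snapshots.get(url, [])
        if on_or_before is not None:
            snaps = [s for s in snaps if s.taken <= on_or_before]
        return snaps[-1] if snaps else None


def resolve(
    url: str,
    fetch_live: Callable[[str], LiveResponse],
    archive: Archive,
    same_site_as: Optional[date] = None,
) -> tuple[str, str]:
    """Return (source, body), where source is 'live', 'archive', or 'missing'."""
    live = fetch_live(url)
    # Anything other than a plain 200 (404, redirect, denied) counts as dead.
    live_ok = live.status == 200

    # Optional constraint: only accept the live site if it is still the
    # site that the archive saw on or before the given date.
    reference = None
    if same_site_as is not None:
        reference = archive.latest(url, on_or_before=same_site_as)
        if live_ok and reference is not None and live.owner != reference.owner:
            live_ok = False  # the domain appears to have changed hands

    if live_ok:
        return ("live", live.body)

    fallback = reference or archive.latest(url)
    if fallback is None:
        return ("missing", "")
    return ("archive", fallback.body)
```

In this sketch the "small string of code on the end of the URL" would simply be parsed into the `same_site_as` date before calling `resolve`. Without it, a dead link falls back to the newest snapshot; with it, a domain that has been bought and rebuilt gets skipped in favor of the snapshot from before the handover.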

In a world with extremely cheap storage that gets cheaper every day, and at the rate technology improves, we should be able to make linkrot history. The idea that clicking a link could give you anything other than the exact content you were expecting, even 100 years after the fact, should seem crazy. We should expect this to work. That's the future of the web: a move from this transient, changing place to one that also archives and serves up the historical web.